When Sensors Lie: Living With Drift, Decay, and False Confidence

- Srihari Maddula

- Jan 4


One of the most uncomfortable moments in building real-world IoT systems is the moment you realize that the sensor is not wrong.

It’s just no longer telling the truth you expected.

At first, everything looks fine. The numbers line up with the datasheet. Lab tests pass. Early deployments look stable. Dashboards feel reassuring. Stakeholders gain confidence. The product moves forward.

And then, slowly, something begins to shift.

The values don’t jump. They drift. They don’t fail. They slide. They don’t break. They age.

At EurthTech, we’ve learned that sensors almost never announce their decline. They don’t raise errors or throw exceptions. They continue reporting data with the same calm confidence they always had — only now, the data means something slightly different.

This is where many IoT products begin to feel unreliable, even though nothing is technically “broken.”

Most teams encounter this for the first time months after deployment. Sometimes longer. A customer reports behaviour that feels inconsistent. An alert triggers too often in one location but never in another. A threshold that worked perfectly during pilots suddenly feels noisy. A model that once felt accurate starts producing uncomfortable edge cases.

The instinctive response is to look at software.

Maybe the firmware has a bug. Maybe the cloud pipeline is misbehaving. Maybe the ML model needs retraining. Maybe the network is introducing artefacts.

Sometimes that’s true.

But very often, the real cause is quieter: the sensor is doing exactly what physics allows it to do over time.

Datasheets create a dangerous illusion of permanence. Accuracy numbers look absolute. Drift figures look small. Environmental dependencies look manageable. In reality, most of those figures are measured under controlled conditions, over limited time windows, on fresh components.

Field deployments are not controlled. They are not gentle. And they are not kind to assumptions.

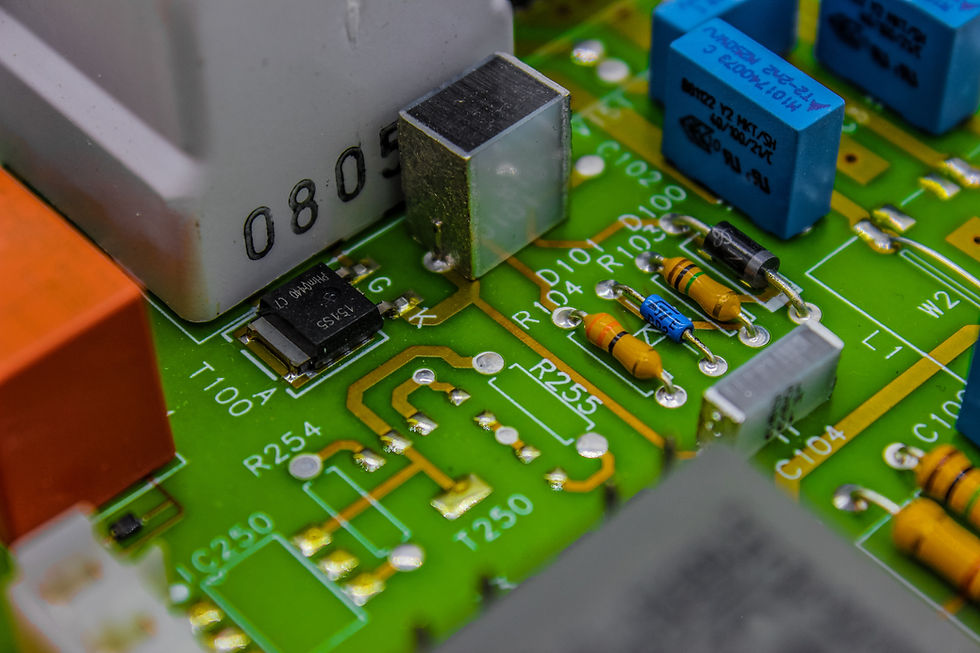

Temperature cycles expand and contract materials. Humidity seeps into places it shouldn’t. Mechanical stress accumulates invisibly. Dust, chemicals, and vibration do their slow work. Power rails fluctuate. Reference voltages wander.

The sensor doesn’t stop working. It just becomes a slightly different sensor.

We’ve seen temperature sensors that slowly read one degree high after long exposure to heat. Accelerometers whose noise floor increases enough to confuse anomaly detection. Gas sensors that become more sensitive to humidity than to the target compound. Pressure sensors that develop subtle hysteresis that never existed during calibration.

None of these issues show up during proofs of concept (PoCs). They don’t even show up during early production.

They show up when the product has lived long enough to experience reality.

This is where confidence becomes dangerous.

Dashboards look precise. Numbers have decimals. Graphs are smooth. Alerts are crisp. It feels scientific. But precision is not the same as accuracy, and accuracy is not the same as truth.

One of the hardest lessons in sensor-based products is learning that data can be internally consistent and still wrong.

We’ve seen deployments where every device in a fleet drifted in roughly the same direction, maintaining relative consistency while becoming collectively inaccurate. From the cloud’s perspective, nothing looked broken. From the customer’s perspective, the system slowly lost trust.

This is why calibration is not a one-time event, even though it is often treated as one.

Initial calibration aligns a device with reality at a moment in time. It does nothing to guarantee alignment in the future.

In manufacturing, calibration often feels like a box to tick. Apply a known stimulus. Capture a response. Store a coefficient. Move on. It works well enough early on, especially when volumes are low and devices are young.
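As a rough illustration of that "known stimulus, captured response, stored coefficient" step, here is a minimal sketch of a two-point calibration. It assumes the sensor is approximately linear over its range; the names `LinearCalibration` and `two_point_calibration` and the example numbers are hypothetical, not taken from any particular product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LinearCalibration:
    """Gain and offset that map a raw reading to reference units."""
    gain: float
    offset: float

    def apply(self, raw: float) -> float:
        return self.gain * raw + self.offset


def two_point_calibration(raw_low: float, ref_low: float,
                          raw_high: float, ref_high: float) -> LinearCalibration:
    """Fit gain and offset from two known stimuli (assumes a linear sensor)."""
    if raw_high == raw_low:
        raise ValueError("raw readings at the two reference points must differ")
    gain = (ref_high - ref_low) / (raw_high - raw_low)
    offset = ref_low - gain * raw_low
    return LinearCalibration(gain, offset)


# Hypothetical factory step: the device read 1.2 at a 0.0 reference
# and 101.8 at a 100.0 reference.
cal = two_point_calibration(raw_low=1.2, ref_low=0.0,
                            raw_high=101.8, ref_high=100.0)
corrected = cal.apply(51.5)  # corrected mid-range reading
```

The stored coefficients describe the device only as it was on the day of calibration, which is exactly the limitation discussed here.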

But over the life of a product, calibration becomes a strategy, not a step.

Some systems rely on periodic recalibration. Others rely on self-referencing techniques. Some use environmental correlation. Some use fleet-level statistical behaviour to identify outliers. Some quietly adapt thresholds rather than raw values. Some accept drift and design business logic around relative change instead of absolute measurement.
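To make the last of these options concrete, here is a small sketch of logic built on relative change rather than absolute value. The function name `relative_rise`, the window size, and the sample values are illustrative assumptions.

```python
def relative_rise(readings: list, window: int = 3) -> float:
    """Latest reading minus the mean of the `window` readings before it."""
    if len(readings) < window + 1:
        raise ValueError("need at least window + 1 readings")
    baseline = sum(readings[-window - 1:-1]) / window
    return readings[-1] - baseline


# Hypothetical samples: the same physical event seen by a fresh sensor
# and by one that has drifted 1.5 units high.
fresh = [20.0, 20.1, 19.9, 23.0]
drifted = [value + 1.5 for value in fresh]

rise_fresh = relative_rise(fresh)
rise_drifted = relative_rise(drifted)
# Both rises are the same size (up to floating-point rounding),
# because a constant offset cancels out of the difference.
```

The trade-off is visible in the arithmetic: a constant offset cancels, but a change in gain (sensitivity) does not, so this approach only tolerates one kind of drift.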

Each approach has trade-offs, and none of them are free.

We’ve seen systems where adding a simple field recalibration mechanism extended usable sensor life by years. We’ve also seen systems where the absence of any recalibration plan turned perfectly functional hardware into an operational liability within eighteen months.

The difference was not sensor quality. It was expectation management.

Edge AI often enters this story with the promise of salvation.

“If the sensor drifts, the model will learn,” the thinking goes.

Sometimes that’s true. More often, it’s optimistic.

Models trained on clean data don’t magically adapt to degraded inputs. They inherit bias. They amplify patterns. They can become very confident about very wrong conclusions. Without careful monitoring, ML can mask sensor decay rather than correct it.

We’ve seen anomaly detectors that slowly shifted their definition of “normal” as sensors aged, eventually normalizing failure conditions. The model didn’t break. It adapted. Unfortunately, it adapted in the wrong direction.

This is where edge intelligence must be paired with humility. Models need anchors. They need sanity checks. They need boundaries that say, “This data no longer makes sense, regardless of what the pattern suggests.”
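One way to picture such anchors and boundaries is a set of fixed checks that sit outside the model and are never updated by it. The sketch below is an assumption about how that could look, not a description of a specific system; `SanityLimits`, `check_reading`, `baseline_has_wandered`, and all limit values are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class SanityLimits:
    min_value: float       # lowest physically plausible reading
    max_value: float       # highest physically plausible reading
    max_step: float        # largest plausible change between two samples
    anchor: float          # baseline fixed at commissioning, never relearned
    max_anchor_gap: float  # how far a learned baseline may wander from it


def check_reading(value: float, previous: Optional[float],
                  limits: SanityLimits) -> list:
    """Return the names of the fixed checks this reading fails."""
    problems = []
    if not (limits.min_value <= value <= limits.max_value):
        problems.append("out_of_physical_range")
    if previous is not None and abs(value - previous) > limits.max_step:
        problems.append("implausible_jump")
    return problems


def baseline_has_wandered(adaptive_baseline: float,
                          limits: SanityLimits) -> bool:
    """True when the model's learned 'normal' has moved too far from the anchor."""
    return abs(adaptive_baseline - limits.anchor) > limits.max_anchor_gap


# Hypothetical limits for an indoor temperature sensor, in degrees Celsius.
limits = SanityLimits(min_value=-40.0, max_value=85.0, max_step=5.0,
                      anchor=22.0, max_anchor_gap=3.0)

failed = check_reading(120.0, previous=22.5, limits=limits)
wandered = baseline_has_wandered(26.5, limits)
```

The second function targets the failure described above: a model whose sense of "normal" slowly follows the sensor can be compared against a reference that does not move.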

In many systems, the most reliable indicator of sensor health is not the sensor itself, but its relationship with the rest of the fleet.

Fleet-level visibility changes how sensor truth is understood.

When hundreds or thousands of devices are deployed, patterns emerge that no single device can reveal. One unit drifts slightly. Another drifts differently. A third remains stable. Over time, clusters form. Outliers appear. Behavior diverges.

This is where sensor trust becomes statistical rather than absolute.

At EurthTech, we’ve seen teams regain confidence in noisy systems not by fixing individual sensors, but by learning how groups behave. Devices in similar environments should tell similar stories. When they don’t, something meaningful is happening — either in the environment or in the hardware.
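A simple way to check whether devices in similar environments are telling similar stories is to compare each device with the group median, scaled by the median absolute deviation (MAD). The sketch below is one possible approach under assumed conditions: the device IDs and readings are made up, and the 3.5 threshold is a commonly used convention for this kind of score rather than a tuned value.

```python
from statistics import median


def fleet_outliers(readings: dict, threshold: float = 3.5) -> list:
    """Return IDs of devices whose reading disagrees with their peers.

    `readings` maps device ID to one reading taken at about the same
    time in comparable environments.
    """
    values = list(readings.values())
    center = median(values)
    mad = median(abs(value - center) for value in values)
    if mad == 0:
        # More than half the group agrees exactly; flag anything that differs.
        return [dev for dev, value in readings.items() if value != center]
    flagged = []
    for dev, value in readings.items():
        # 0.6745 rescales the MAD so the score is comparable to a z-score
        # for roughly normal data.
        score = 0.6745 * abs(value - center) / mad
        if score > threshold:
            flagged.append(dev)
    return flagged


# Hypothetical group of five co-located temperature sensors.
group = {"dev-a": 21.0, "dev-b": 21.2, "dev-c": 20.9,
         "dev-d": 21.1, "dev-e": 24.0}
suspects = fleet_outliers(group)
```

A peer comparison like this only catches devices that diverge from the group. It will not notice the case described earlier, where the whole fleet drifts in the same direction, so it complements an external reference rather than replacing one.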

This kind of thinking transforms how alerts, thresholds, and decisions are designed. It shifts the product from reacting to single readings to interpreting context.

That shift doesn’t make the system simpler. It makes it survivable.

From a business perspective, sensor drift rarely shows up as a line item. It shows up as friction.

Support tickets that feel vague. Customer complaints that are hard to reproduce. Engineering hours spent chasing “intermittent issues.” Model retraining cycles that feel too frequent. Confidence slowly eroding.

These costs accumulate quietly. By the time someone asks whether the sensor choice was right, the answer is no longer actionable.

This is why experienced teams treat sensor behavior as a lifecycle problem, not a specification problem. They ask how the sensor will behave after one year, three years, five years. They ask how failure will appear, not just whether it will occur. They design systems that degrade gracefully instead of collapsing suddenly.

Good sensor design does not eliminate lies. It makes lies detectable.

The uncomfortable truth is that no sensor tells the truth forever. All of them drift. All of them age. All of them respond to the world in ways that datasheets cannot fully describe.

The difference between fragile products and durable ones is not whether sensors lie. It’s whether the system expects them to.

At EurthTech, we’ve learned to treat sensor data not as facts, but as testimony. It is evidence, not truth. It must be corroborated, contextualized, and occasionally challenged.

When products are designed this way, something interesting happens. Confidence returns. Not the fragile confidence of clean numbers, but the quieter confidence that comes from understanding how the system behaves when reality intrudes.

That confidence doesn’t come from better sensors alone. It comes from better expectations.
